With the seemingly unending debate about the debt ceiling raging, I wanted to highlight a side issue that’s been burbling up in the United States over the past several decades: that of income inequality.

If we follow this way, way back, the widely accepted story is this: before the agricultural revolution took hold, bands of foragers had little to differentiate one member from another. There was no concept of “income,” and while no doubt certain leaders may have commanded respect, provisions were divided more or less equally.

We see the rise of inequality with the dawn of agricultural civilizations, where food surpluses enabled delineation and differentiation between people (interestingly enough, it’s also now considered to be the time when what we consider traditional mores — marriage, monogamy, sexual conservatism — took root). With tribal chiefs commanding armies and other forces of social coercion, it was only a matter of time before rulers became monarchs and descendants of great military heroes became kings and nobility. This was the Faustian bargain we humans created for ourselves at the dawn of civilization: in exchange for predictable patterns of settlement and food production, most of us would enjoy fairly meager fruits of the society’s benefits; most of the reward would go to the rulership. In its most extreme form, the lowest elements of society — or those captured from other societies in massive wars — would become property themselves, forced to work with no reward at all. We call this concept slavery.

The division between rulers and commercial interests also began early; while kings and emperors may have held sovereign political power, there still needed to be a fungible medium of exchange by which services and goods could be traded. We have a name for this concept: money. While there had long been merchants and trader classes, it was really only in the Renaissance and the Enlightenment, when Europe, emerging from centuries of stagnation and capitalizing on advances from other places, took the lead and began (for better or worse) to explore and colonize the world, that those classes came into their own. This was, in a sense, the first widespread philosophical uprising against concepts such as the divine right of kings, and it held that the mercantile class was entitled to share in society’s riches as well. With industrialization hyper-accelerating the ability of capital to be deployed and engaged (whereas before much was tied to land and agriculture, which were held largely by monarchs and the nobility), we entered the modern age with a new term: Capitalism.

Born in the Enlightenment, capitalism’s tenets seem on the surface to be quite noble: the de-emphasis of peerage and inherited titles as a delineator of wealth and influence; the notion of “careers open to talent,” where one’s ability determined one’s standing. When the West’s newest nation of the late 1700s, founded largely by former subjects of one of Europe’s most mercantile-friendly powers, outlined its raison d’être in its founding document, it more or less distilled these notions into a single, catchy phrase:

“All men are created equal.”

I’m sure that was heady stuff for 1776. So heady, in fact, that its underlying meme of “equality” caught fire around the world. The delineation was eventually expanded to include women, provide redress for the persecution of minorities, and birth entire philosophical concepts regarding the equality of peoples. But what its underlying “free market” system didn’t do is make the world less unequal. Where once we had emperors, now we had captains of industry; where once kings ruled nations, now robber barons commanded corporate trusts. Beginning in the early-to-mid 1800s in Britain, and culminating with the Gilded Age in ascendant America at the turn of the 20th century, writers and social activists from Dickens to Twain to (yes) Marx and Engels pointed out the alarming trend: under classical economics, a small number of smart, greedy, fortunate folks ended up in much the same place as kings of old… with the remaining populace forced to forage for the remaining scraps. Not literally, of course, but in a more figurative, modern sense: working within a system that was rigged against them, workers endured horrific conditions and low pay with little redress or recourse. The legal system was bought and paid for by the rich. A new aristocracy was born.

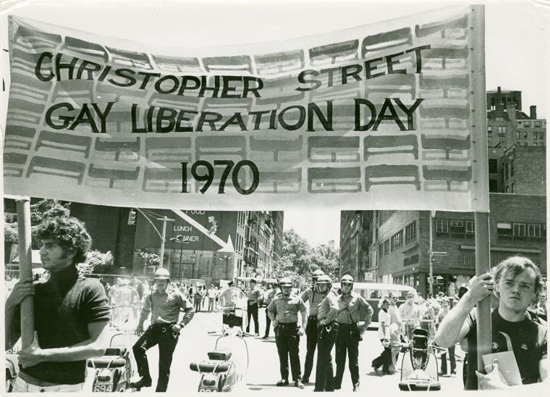

Of course, the huddled masses fought back, and over the middle decades of the twentieth century, until well into the 1970s, unprecedented new rights and benefits emerged. Between labor unions, old-age pension plans such as Social Security, grants and loans to attend college, and (in America) the prosperity of being the sole Western nation standing after World War Two and a net petroleum producer, the 1950s and 1960s were, in a sense, a Golden Age of socio-economic equality. As I wrote in my last piece, right-wing nostalgia for the 1950s obscures the fact that this was the most “socialist” era in the nation’s history. The income divide was the narrowest it had ever been, and tax rates on the wealthy were much, much higher than today.

Then, of course, everything changed. The reasons for this are many, but from what I’ve been able to piece together, a lot of it rests with Ronald Reagan. Although earlier in his movie career he was actually something of a liberal (as head of the Screen Actors Guild, he was pro-union), his work as spokesperson for General Electric and its cadre of old-line pro-business conservative executives slowly changed his mind. He spent years riding trains across the country and honing his speech on the evils of government regulation… so much so that the breakthrough televised speech he gave in 1964 in support of Barry Goldwater’s presidential campaign was a near-clone of the one he’d given during his years at GE. This galvanized a movement and utterly changed the trajectory of young activism: whereas sixties radicals promoted free love and equality, by the 1980s the vanguard of the young politico was arguably the fictional character Alex P. Keaton on the sitcom Family Ties (played, ironically, by a rather liberal Canadian, Michael J. Fox). I came of age in that era, and between the cultural zeitgeist; a life lived in a corrupt, overtaxed and inefficient Canadian province; and some decidedly neo-conservative parents, I’d come to accept that the old liberal warhorses of unionization and social welfare were bunk. The future lay in the past.

What I think none of us could have anticipated (though in retrospect we should have) are the unintended consequences of that shift. With deindustrialization, de-unionization, rising health-care costs, and the build-up of the financial industry, the income divide in America is now the greatest it’s been since the Gilded Age of a century ago, and it’s as great in America as it is in Third World nations such as Ghana and Uganda.

But the nagging question this raises is: so what? Is there anything inherently “wrong” with a great income divide, if that’s the way the market works things out? In the free-market fundamentalism espoused by the conservative set, this is just the way of things. Some go even further, as a fellow I knew used to state, by claiming that “poor people create their own drama.” Another claimed “there will always be this divide; there’s nothing we can do about it,” a worldview I’d seen echoed by commentators such as George Will. These people point (rightly, sometimes) to the hypocrisy, inefficiency and corruption of government programs, and claim this is the best we can do. To the poor, to paraphrase that New York Daily News headline about a then-bankrupt New York, these people say: drop dead.

I’ve long rejected that worldview, which I guess in the American sphere tars me as a “liberal.” For one thing, I simply refuse to accept that what we’ve got is “as good as it gets,” and that any attempts to improve our collective lot are doomed to failure. For another, there’s a deep-seated emotional notion I have about inequality that has long guided me (and many others, I suspect) in my life philosophy.

I keenly remember, as a boy growing up in a competitive, nouveau-riche suburb, the materialist one-upmanship that marked my community. One school I went to imposed a uniform dress code, not out of some vague notion of Dead Poets Society tradition but rather to prevent a materialistic fashion show from transpiring among pre-teens. Sitting on a lakeside dock one summer with four other boys, all of us age ten, I was treated to each kid bragging unashamedly about his father’s weekly earnings.

But is that all that disdain for income inequality is: childhood jealousy? I would argue that it’s the very roots of this jealousy and resentment that are worth examining. While misapplied resentment can lead to a host of ills, the feeling probably has its roots in an adaptive mechanism to regulate how we treat each other. While many primate societies possess hierarchical structures (baboons are the prime example), there are others that do not, such as bonobos. “Might makes right” is not an absolute, and I feel that this disapproval of and resentment at great wealth stem from our deep-seated desire for a world more like the one we’ve long abandoned: the forager world of bands of equals.

While I’m not suggesting we return to the African savannah, I believe that our post-industrial civilization offers the opportunity to reclaim what the past millennia have denied us: a more equal world. The income divide is an arbitrary measure we have chosen to impose upon ourselves, and while I fully support rewarding those who work harder and take the initiative, I feel our reward mechanism has, in America at least, gotten seriously out of kilter. It’s for that reason that the battle between liberal and conservative in America has gotten so pitched: it’s really a fundamental fight between two very different philosophies. It’s difficult, I admit, in a country founded on raw capitalism, for the progressives to be heard as much as they should. But in my view, this is a fight worth having. I know which side I’m on.